Connected Vehicles for Urban Environments

By: Prof. Yassine Ruichek

Systems and Transportation Laboratory (SET)

Research Institute on Transports, Energy and Society (IRTES)

University of Technology of Belfort-Montbéliard (UTBM)

Over the past decades, connected or intelligent vehicles have attracted growing attention and development effort from both the research community and industry. Today, a car driver is assisted by several onboard technologies, called advanced driver assistance systems (ADAS). These systems reduce accident risk by allowing the driver to anticipate and adapt his or her behavior when potentially dangerous situations occur. ADAS typically include adaptive cruise control (ACC), lane departure warning, collision avoidance, automatic parking, traffic sign recognition, and map-based navigation with route optimization that takes the traffic state into account.

To stimulate the development of intelligent vehicles, the U.S. Department of Defense, through its research agency DARPA, organized three autonomous driving competitions: the DARPA Grand Challenge in 2004 [1] and 2005 [2], and the DARPA Urban Challenge in 2007 [3]. The Chinese government has organized similar intelligent vehicle competitions since 2009 [4]. In May 2012, Google's driverless car received the first autonomous-vehicle license, issued by the state of Nevada [5]. By September 2012, three U.S. states (Nevada, Florida and California) had passed laws permitting driverless cars. Industry, too, has been putting a growing number of prototypes on the road.

IRTES-SET (Systems and Transportation Laboratory of the Research Institute on Transports, Energy and Society) at UTBM (University of Technology of Belfort-Montbéliard) works on the concept of connected vehicles, with particular attention to urban driving environments, where the constraints differ from those encountered on highways. Recent work concerns methods and techniques for improving the perception and localization of intelligent vehicles. An example of an experimental platform at IRTES-SET is shown in Fig. 1.

Fig. 1. SET Car experimental platform equipped with different sensors (cameras, LRFs, GPS, IMU, etc.)

Vehicle environment perception

Concerning perception, we propose a dynamic occupancy grid map to represent the vehicle environment sensed by a stereo vision system and a lidar [6]. To enrich its semantic content, the occupancy grid map also incorporates object motion and recognition information. First, the two sensors are calibrated by placing a common calibration chessboard in front of the stereoscopic system and the 2D lidar, and by exploiting the geometric relationship between the cameras of the stereoscopic system [6, 7]. For greater robustness, this calibration method also integrates sensor noise models and a Mahalanobis distance optimization. A stereo visual odometry method is then developed by comparing several approaches to image feature detection and feature-point association; after a comprehensive comparison, the feature detector and association approach that give the best stereo visual odometry performance are selected [6]. Next, independently moving objects are detected and segmented using the visual odometry results and the U-disparity image [6, 8]. Finally, spatial features are extracted with kernel PCA, and classifiers trained on these features recognize common types of moving objects, e.g. pedestrians, vehicles and cyclists [6, 8].
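The article does not give the update equations used in [6]. As a rough illustration of the occupancy part of such a map, the sketch below uses the textbook log-odds Bayesian update, assuming independent cells and treating stereo and lidar observations as independent successive updates. The sensor-model probabilities (`P_OCC_HIT`, `P_OCC_MISS`), the clamp limits and the `OccupancyGrid` class are hypothetical, and the motion and recognition layers described above are not modeled.

```python
import math

# Hypothetical inverse sensor model (illustrative values, not taken from [6]).
P_OCC_HIT = 0.7    # P(occupied) for a cell where a sensor reports an obstacle
P_OCC_MISS = 0.4   # P(occupied) for a cell that a sensor ray passes through
L_MIN, L_MAX = -5.0, 5.0  # clamp log-odds so cells can still change state later


def logit(p: float) -> float:
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


class OccupancyGrid:
    """Hypothetical 2D grid storing the log-odds of occupancy for each cell."""

    def __init__(self, width: int, height: int, prior: float = 0.5) -> None:
        self.l0 = logit(prior)
        self.cells = [[self.l0] * width for _ in range(height)]

    def update(self, row: int, col: int, hit: bool) -> None:
        """Fuse one observation (from either sensor) into a cell."""
        p = P_OCC_HIT if hit else P_OCC_MISS
        # Bayesian log-odds update: add the sensor evidence, remove the prior once.
        value = self.cells[row][col] + logit(p) - self.l0
        self.cells[row][col] = max(L_MIN, min(L_MAX, value))

    def probability(self, row: int, col: int) -> float:
        """Recover the occupancy probability from the stored log-odds."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.cells[row][col]))


grid = OccupancyGrid(width=4, height=4)
grid.update(1, 2, hit=True)   # e.g. a lidar return falling in cell (1, 2)
grid.update(1, 2, hit=True)   # e.g. a stereo point falling in the same cell
grid.update(3, 0, hit=False)  # e.g. a lidar ray passing through cell (3, 0)
print(round(grid.probability(1, 2), 3))  # prints 0.845
```

Working in log-odds turns each Bayesian update into an addition, and the clamp keeps a cell from becoming so certain that a later moving object could never clear it.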

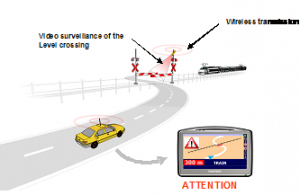
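The U-disparity image used for moving-object detection can be sketched as a column-wise histogram of disparities. An upright object at roughly constant depth piles many pixels into the same (disparity, column) cells, so it shows up as a bright horizontal segment. The sketch below only builds that histogram and thresholds it. The combination with visual odometry that [6, 8] use to separate independently moving objects from ego-motion is not reproduced, and the function names, toy disparity map and `min_votes` threshold are illustrative assumptions.

```python
from typing import List, Optional, Tuple

DisparityMap = List[List[Optional[int]]]


def u_disparity(disparity: DisparityMap, max_disp: int) -> List[List[int]]:
    """Column-wise disparity histogram: entry [d][u] counts pixels in column u with disparity d."""
    width = len(disparity[0]) if disparity else 0
    hist = [[0] * width for _ in range(max_disp + 1)]
    for row in disparity:
        for u, d in enumerate(row):
            if d is not None and 0 <= d <= max_disp:  # skip invalid stereo matches
                hist[d][u] += 1
    return hist


def obstacle_cells(hist: List[List[int]], min_votes: int) -> List[Tuple[int, int]]:
    """Return (disparity, column) cells with enough votes to suggest an upright surface.

    `min_votes` is a hypothetical tuning threshold.
    """
    return [
        (d, u)
        for d, counts in enumerate(hist)
        for u, votes in enumerate(counts)
        if votes >= min_votes
    ]


# Toy 4x4 disparity map: a near object (d = 3) fills the two right-hand columns.
disp: DisparityMap = [
    [1, 1, 3, 3],
    [1, 1, 3, 3],
    [2, 2, 3, 3],
    [None, 2, 2, 2],
]
hist = u_disparity(disp, max_disp=3)
print(obstacle_cells(hist, min_votes=3))  # prints [(3, 2), (3, 3)]
```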

Within the PANsafer project (towards safer level crossings), supported by the French ANR agency, we proposed a system that alerts users approaching a level crossing, as well as operators, when a dangerous situation occurs within the crossing. In this work, we explored the possibility of implementing a smart video surveillance system for detecting and evaluating abnormal situations induced by users (pedestrians, vehicle drivers, unattended objects) in level crossings [9]. The system starts by detecting, separating and tracking moving objects in the level crossing. Then, a Hidden Markov Model is developed to estimate the ideal trajectory of each target, making it possible to discern dangerous situations. Next, the estimated ideal trajectories are analyzed with the Dempster-Shafer data fusion technique to evaluate the risk level of each target instantly. The analysis also makes it possible to recognize hazard scenarios (obstacle within the level crossing, vehicles stopped on the line, vehicles zigzagging between closed half barriers, etc.). The video surveillance system is connected to a communication system (WAVE), which takes the information on the dynamic status of the level crossing and sends it to users approaching the crossing or to operators. The whole approach is illustrated in Fig. 2.
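The article does not detail how [9] builds its belief masses. The sketch below therefore only illustrates Dempster's rule of combination itself, on a hypothetical two-hypothesis frame {safe, risky} with made-up evidence from two sources. Mass left on the whole frame expresses ignorance, and the conflicting mass K is removed by renormalizing with 1 - K.

```python
from itertools import product
from typing import Dict, FrozenSet

Mass = Dict[FrozenSet[str], float]


def dempster_combine(m1: Mass, m2: Mass) -> Mass:
    """Combine two mass functions over the same frame with Dempster's rule."""
    combined: Mass = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb  # mass assigned to contradictory pairs (K)
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    # Renormalize by 1 - K so the remaining masses sum to one.
    return {s: w / (1.0 - conflict) for s, w in combined.items()}


SAFE, RISKY = frozenset({"safe"}), frozenset({"risky"})
EITHER = SAFE | RISKY  # ignorance: mass not committed to either hypothesis

# Hypothetical evidence about one tracked target at the crossing.
m_speed = {RISKY: 0.6, EITHER: 0.4}                 # source 1, e.g. derived from speed
m_deviation = {RISKY: 0.5, SAFE: 0.2, EITHER: 0.3}  # source 2, e.g. deviation from the ideal trajectory

fused = dempster_combine(m_speed, m_deviation)
print({tuple(sorted(k)): round(v, 3) for k, v in fused.items()})
# prints {('risky',): 0.773, ('safe',): 0.091, ('risky', 'safe'): 0.136}
```

Two moderately confident sources reinforce each other here: the combined belief in "risky" (about 0.77) exceeds what either source assigned on its own.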

Vehicle localization

Connected vehicles also need systems capable of estimating absolute or relative vehicle positions and orientations at all times. From a map provider's point of view, localization is also a key step in the geo-referencing process of mobile mapping systems. A GPS (Global Positioning System) receiver can provide the absolute position of an equipped vehicle, and it has been considered a basic sensor for outdoor vehicle localization owing to its high localization precision over long distances.

However, because GPS signals are affected by atmospheric conditions, satellite distribution, radio signal noise, etc., the accuracy of GPS receivers over short distances is limited to a few meters. In some urban locations (e.g., streets lined with tall buildings, tunnels), the information provided by GPS receivers may be inaccurate or even unavailable due to signal reflection or poor satellite visibility. When a GPS signal is received only after reflection, the resulting pseudo-range is longer than the true range, and if such contaminated ranges are used for position estimation, the computed position is erroneous. To improve localization precision and robustness, a popular approach is to combine different types of data coming from different sources. Within a sensor fusion framework, we proposed a localization approach that uses a Bayesian formalism to fuse GPS information, data acquired from a lidar, a stereo vision system and a gyrometer, and data extracted from a 2D GIS (Geographical Information System) [10, 11]. Data redundancy is also exploited to detect sensor faults, so that the multi-sensor system can be reconfigured to rely only on coherent data.
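The Bayesian formalism of [10, 11] is not spelled out in this article. Its simplest building block can be sketched under strong assumptions: two independent 2D position estimates with Gaussian errors (for instance a GPS fix and a hypothetical lidar/GIS map-matching fix, with made-up covariances) fused in information form, where each estimate is weighted by its inverse covariance. A complete system would run a recursive filter with a motion model (for example one driven by the gyrometer), which is not shown.

```python
from typing import Tuple

Vec2 = Tuple[float, float]
Mat2 = Tuple[Tuple[float, float], Tuple[float, float]]


def inv2(m: Mat2) -> Mat2:
    """Invert a 2x2 matrix (used here for covariance matrices)."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0.0:
        raise ValueError("singular covariance matrix")
    return ((d / det, -b / det), (-c / det, a / det))


def add2(m: Mat2, n: Mat2) -> Mat2:
    """Element-wise sum of two 2x2 matrices."""
    return ((m[0][0] + n[0][0], m[0][1] + n[0][1]),
            (m[1][0] + n[1][0], m[1][1] + n[1][1]))


def matvec(m: Mat2, v: Vec2) -> Vec2:
    """Multiply a 2x2 matrix by a 2D vector."""
    return (m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1])


def fuse(x1: Vec2, p1: Mat2, x2: Vec2, p2: Mat2) -> Tuple[Vec2, Mat2]:
    """Fuse two independent Gaussian position estimates in information form."""
    i1, i2 = inv2(p1), inv2(p2)        # information matrices P^-1
    p = inv2(add2(i1, i2))             # fused covariance (P1^-1 + P2^-1)^-1
    w1, w2 = matvec(i1, x1), matvec(i2, x2)
    x = matvec(p, (w1[0] + w2[0], w1[1] + w2[1]))  # information-weighted mean
    return x, p


# Made-up inputs in a local metric frame (meters).
gps_xy, gps_cov = (0.0, 0.0), ((4.0, 0.0), (0.0, 4.0))  # hypothetical GPS fix, 2 m std dev per axis
map_xy, map_cov = (1.0, 2.0), ((1.0, 0.0), (0.0, 1.0))  # hypothetical lidar/GIS fix, 1 m std dev per axis
x, p = fuse(gps_xy, gps_cov, map_xy, map_cov)
print(x)  # prints (0.8, 1.6)
```

The fused variance (0.8 m² per axis) is smaller than that of either input, and the estimate is pulled toward the more precise source.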

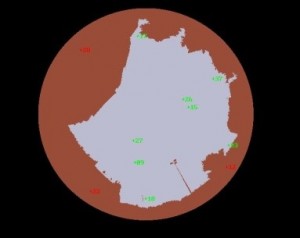

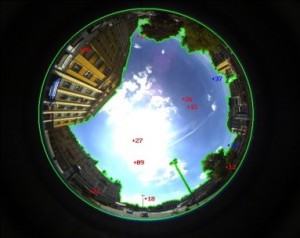
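The title of [10] indicates that sensor validation there relies on an extended NIS (normalized innovation squared) test. That extension is not described in this article, so the sketch below shows only the standard NIS gate. Each 2D position fix is compared with the filter's prediction, and a fix whose NIS exceeds the chi-square quantile is flagged as incoherent (for example, a GPS fix corrupted by multipath) and left out. All values are invented, and for brevity a single innovation covariance is shared by both sensors, which a real filter would compute per sensor.

```python
import math
from typing import Dict, Tuple

Vec2 = Tuple[float, float]
Mat2 = Tuple[Tuple[float, float], Tuple[float, float]]


def nis(innovation: Vec2, s: Mat2) -> float:
    """Normalized innovation squared nu^T S^-1 nu for a 2D innovation."""
    (a, b), (c, d) = s
    det = a * d - b * c
    vx, vy = innovation
    # Quadratic form using the explicit 2x2 inverse [[d, -b], [-c, a]] / det.
    return (vx * (d * vx - b * vy) + vy * (-c * vx + a * vy)) / det


def chi2_quantile_2dof(confidence: float) -> float:
    """Chi-square quantile for 2 degrees of freedom (its CDF is 1 - exp(-x / 2))."""
    return -2.0 * math.log(1.0 - confidence)


def validate_fixes(measurements: Dict[str, Vec2], predicted: Vec2, s: Mat2,
                   confidence: float = 0.95) -> Dict[str, bool]:
    """Return True for each fix that passes the NIS gate."""
    gate = chi2_quantile_2dof(confidence)  # about 5.99 at 95 %
    result = {}
    for name, z in measurements.items():
        nu = (z[0] - predicted[0], z[1] - predicted[1])  # innovation
        result[name] = nis(nu, s) <= gate
    return result


predicted = (10.0, 5.0)        # hypothetical predicted position (m)
s = ((1.0, 0.0), (0.0, 1.0))   # hypothetical innovation covariance (m^2)
fixes = {"gps": (14.0, 7.0), "lidar_gis": (10.5, 5.5)}  # made-up; GPS biased by multipath
print(validate_fixes(fixes, predicted, s))  # prints {'gps': False, 'lidar_gis': True}
```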

We are also working on characterizing the GNSS signal reception environment in order to identify the state of each satellite with respect to the GPS receiver (blocked, direct or indirect). For this purpose, a fisheye camera is mounted near the GPS receiver and pointed at the sky. The images are segmented and classified into two classes (sky and non-sky) by combining color and texture information [12]. In the first step, the satellites are projected onto the image, and a position is estimated using only the satellites projected into the sky region (see Fig. 3), i.e. LOS (Line of Sight) satellites, whose signals are received via a direct path [12]. In the second step, the objective is to model multipath phenomena by reconstructing the environment around the receiver using fisheye stereo vision. This work is carried out within the CAPLOC project, supported by the French PREDIT program.
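The first step described above, projecting satellites onto the segmented image and keeping only those that fall on sky pixels, can be sketched as follows. The camera model is an assumption, since the article does not give one: an ideal equidistant fisheye (r = f·θ) looking straight up, with north at the image top, so that east appears on the image's left when the sky is seen from below. The sky/non-sky mask is taken as given (in [12] it comes from color and texture classification), and the mask, satellite identifiers and angles are invented.

```python
import math
from typing import List, Tuple


def project_satellite(azimuth_deg: float, elevation_deg: float,
                      cx: float, cy: float, f_px: float) -> Tuple[float, float]:
    """Project a satellite direction into an upward-looking fisheye image.

    Assumes an equidistant fisheye (r = f * theta), optical axis at the zenith,
    north toward the image top; seen from below, east then falls on the image's left.
    """
    theta = math.radians(90.0 - elevation_deg)  # angle from the optical axis (zenith)
    r = f_px * theta                            # radial distance in pixels
    az = math.radians(azimuth_deg)              # measured clockwise from north
    u = cx - r * math.sin(az)
    v = cy - r * math.cos(az)
    return u, v


def is_line_of_sight(azimuth_deg: float, elevation_deg: float, sky_mask: List[List[bool]],
                     cx: float, cy: float, f_px: float) -> bool:
    """True if the satellite projects onto a pixel classified as sky."""
    u, v = project_satellite(azimuth_deg, elevation_deg, cx, cy, f_px)
    row, col = int(round(v)), int(round(u))
    if 0 <= row < len(sky_mask) and 0 <= col < len(sky_mask[0]):
        return sky_mask[row][col]
    return False  # outside the image: treat as not visible


# Hypothetical 101x101 mask: a building hides the eastern half of the sky (image left).
size, c = 101, 50.0
f = c / (math.pi / 2)  # the horizon (theta = 90 deg) lands on the image border
mask = [[col >= 50 for col in range(size)] for _ in range(size)]

satellites = {"G05": (90.0, 30.0), "G12": (270.0, 30.0), "G20": (0.0, 90.0)}  # (azimuth, elevation) in degrees
los = [prn for prn, (az, el) in satellites.items() if is_line_of_sight(az, el, mask, c, c, f)]
print(los)  # prints ['G12', 'G20']
```

Only the satellites kept in `los` would then enter the position computation; in practice their azimuth and elevation would typically be reported by the receiver.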

Fig. 3. Image segmentation/classification and satellite projection

References

[1] http://archive.darpa.mil/grandchallenge04/

[2] http://archive.darpa.mil/grandchallenge05/

[3] http://archive.darpa.mil/grandchallenge

[4] http://baike.baidu.com/view/4572422.htm

[5] http://en.wikipedia.org/wiki/Google_driverless_car

[6] Y. Li, Stereo vision and Lidar based dynamic occupancy grid mapping: Application to scenes analysis for intelligent vehicles, PhD thesis, UTBM, France, December 2013.

[7] Y. Li, Y. Ruichek and C. Cappelle, Optimal Extrinsic Calibration between a Stereoscopic System and a Lidar, in IEEE Transactions on Instrumentation and Measurement, 2013.

[8] Y. Li and Y. Ruichek, Moving Objects Detection and Recognition Using Sparse Spatial Information in Urban Environments, in Proc. of IEEE Intelligent Vehicles Symposium (IVS), Alcalá de Henares, Spain, 2012.

[9] H. Salmane, Y. Ruichek and L. Khoudour, A novel evidence based model for detecting dangerous situations in level crossing environments, in Expert Systems with Applications, 2013.

[10] L. Wei, C. Cappelle and Y. Ruichek, Camera/Laser/GPS fusion method for vehicle positioning under extended NIS based sensor validation, in IEEE Transactions on Instrumentation and Measurement, 2013.

[11] L. Wei, C. Cappelle and Y. Ruichek, Horizontal/vertical LRFs and GIS Maps aided vehicle localization in urban environment, in Proc. of International Conference on Intelligent Transportation Systems, The Hague, The Netherlands, 2013.

[12] J. Marais, C. Meurie, D. Attia, Y. Ruichek and A. Flancquart, Towards accurate localization in guided transport: Combining GNSS and imaging information, in Transportation Research Part C: Emerging Technologies, 2013.

Yassine RUICHEK received his PhD in control and computer engineering and his Habilitation à Diriger des Recherches (HDR) in physical sciences from the University of Lille, France, in 1997 and 2005, respectively. He was an assistant researcher and teacher from 1998 to 2001. From 2001 to 2007, he was an associate professor at the University of Technology of Belfort-Montbéliard, France. Since 2007, he has been a full professor, conducting research in multi-source/multi-sensor fusion for environment perception and localization, with applications in intelligent transportation systems. He has participated, and continues to participate, in several projects in these areas. His research interests are computer vision, data fusion and pattern recognition. Since 2012, he has been the head of the Systems and Transportation Laboratory (SET) of the Research Institute on Transportation, Energy and Society (IRTES).

About the Newsletter

Editors-in-Chief

Jin-Woo Ahn

Co-Editor-in-Chief

Sheldon Williamson

Co-Editor-in-Chief

The TEC eNewsletter is now being indexed by Google Scholar and peer-reviewed articles are being submitted to IEEE Xplore.